Heightmap

Implementation and Optimisation

Procedural Heightmap generation on GPU

Instead of loading a heightmap in the program as a texture generated somewhere else, the first step is to render it ourselves on GPU. To make it procedural, we need the heightmap generation to take as a parameter the position of the point we want to generate, such that when we later move the user current position, the program generates the heightmap again, taking into account the new position. Heightmaps are actually noise texture that are manipulated such that the result, even if generated quasi-randomly, corresponds visually to a physical terrain. This is the reason why often in my code the heightmap is referred to as "noise" or "noise texture".

Since we want to generate a texture, the C++ part of the code looks exactly like a program that renders a quad:

//Code from Noise.h in the source code

const GLfloat vertex_point[] = { /*V1*/ -1.0f, -1.0f, 0.0f, /*V2*/ +1.0f, -1.0f, 0.0f, /*V3*/ -1.0f, +1.0f, 0.0f, /*V4*/ +1.0f, +1.0f, 0.0f};

// buffer

glGenBuffers(1, &vertex_buffer_object_);

glBindBuffer(GL_ARRAY_BUFFER, vertex_buffer_object_);

glBufferData(GL_ARRAY_BUFFER, sizeof(vertex_point), vertex_point, GL_STATIC_DRAW);

GLuint vertex_point_id = glGetAttribLocation(program_id_, "vpoint");

glEnableVertexAttribArray(vertex_point_id);

glVertexAttribPointer(vertex_point_id, 3, GL_FLOAT, DONT_NORMALIZE, ZERO_STRIDE, ZERO_BUFFER_OFFSET);

const GLfloat vertex_texture_coordinates[] = { /*V1*/ 0.0f, 0.0f, /*V2*/ 1.0f, 0.0f, /*V3*/ 0.0f, 1.0f, /*V4*/ 1.0f, 1.0f};

glGenBuffers(1, &vertex_buffer_object_);

glBindBuffer(GL_ARRAY_BUFFER, vertex_buffer_object_);

glBufferData(GL_ARRAY_BUFFER, sizeof(vertex_texture_coordinates), vertex_texture_coordinates, GL_STATIC_DRAW);

GLuint vertex_texture_coord_id = glGetAttribLocation(program_id_, "vtexcoord");

glEnableVertexAttribArray(vertex_texture_coord_id);

glVertexAttribPointer(vertex_texture_coord_id, 2, GL_FLOAT, DONT_NORMALIZE, ZERO_STRIDE, ZERO_BUFFER_OFFSET);

The Vertex Shader is even simpler

//Code from noide_vshader.glsl in the source

in vec3 vpoint;

in vec2 vtexcoord;

out vec2 uv;

void main() {

gl_Position = vec4(vpoint, 1.0);

uv = vtexcoord;

} Heightmap generation

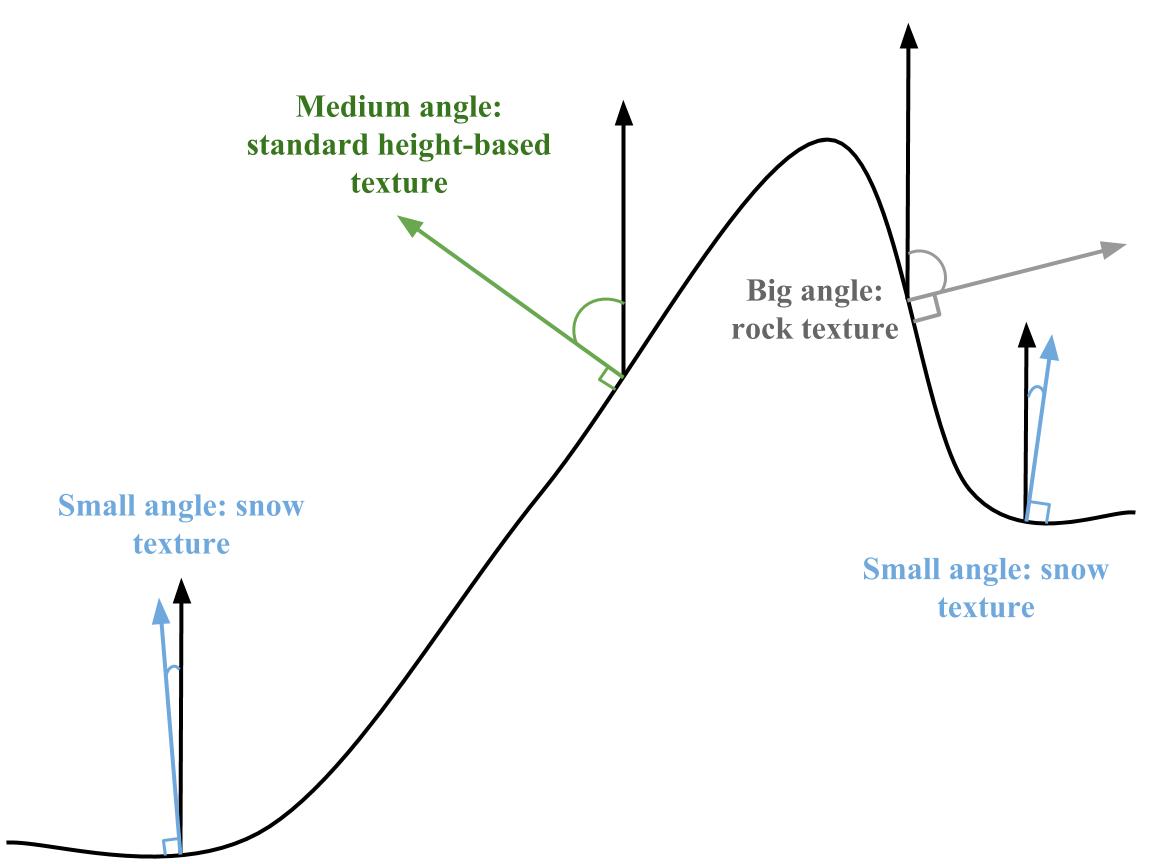

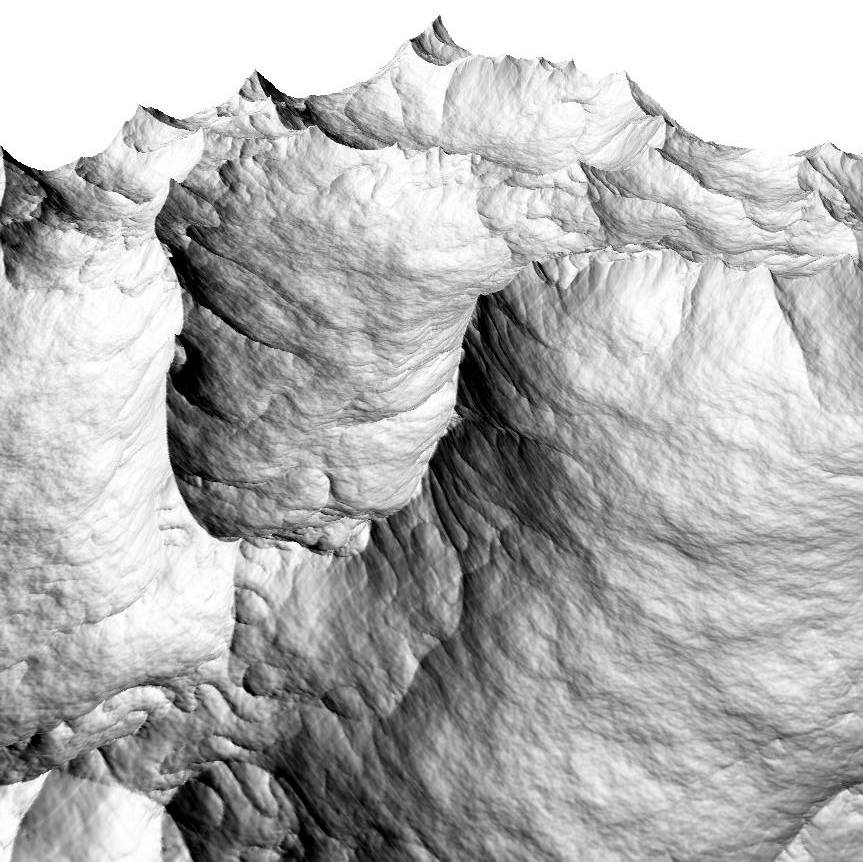

The goal is to find a way to create a heightmap resulting in a realistic mountainous terrain. The first intuition would be to superpose different instantiations of Fractional Brownian Motion, shifted and/or rotated at each iteration. The problem with this approach is that the rendered terrain looks very "roundish", as FBM doesn't generate peaks and trenches.

This paper from F. Kenton Musgrave proposes a Hybrid Multifractal algorithm that generates exactly the kind of peaks and trenches we're looking for, but a simple iteration of this algorithm only produces lines of mountains with very smooth flat areas (cF the "riges" of this paper). The best compromise has been to combine iterations of both FBM and Hybrid Multifractal to get the look needed: (in fragment shader)

//Code from noise_fshader.glsl in the source

float getTerrainHeight(vec2 pos){

vec2 p = pos*density;

float b2 = fbm(p*10)*0.2;

float h1 = hyrbidMultifractal(p/8.0, H, lacunarity, octaves, offset, gain);

float h2 = hyrbidMultifractal(p/3.0, H, lacunarity, octaves, offset, gain/2.0)*2.0;

float h3 = hyrbidMultifractal(p*2.0, H, lacunarity, octaves, offset, gain)*0.3;

return b2+h1+h2+h3-0.8;

} The density variable is a simple float that is used to spread or condensate the heightmap.

By playing around with the position sent to the hyrbidMultifractal function and the scaling applied to the returned height value of this function, we can superpose layers of terrain to get more or less details, and more or less rugosity.

Now that we know how to generate a heightmap point given a UV coordinate, all that remains to do in the main() is to render it on the fragment as the color

//Code from noise_fshader.glsl in the source

void main(){

color = getTerrainHeight(uv);

}

And the heightmap is done ! All that remains to do is create a 1-channel float Framebuffer (using the GL_R32F format), bind it, render the heightmap to texture, and use it like one would use a heightmap loaded from a file.

Managing the displacement

When generating the heightmap that way, we don't have many other choices than to leave the camera stationnary and actually move the position used for computing the heightmap. To do so, we have to store the cumulated ground displacement in a variable. Let's say the up-vector is (0,1,0), each time the C++ program wants to move in a certain direction, we have to update the heightmap displacement vector as displacement += vec2(move.x, move.z), then pass this vector to the fragment shader of the heightmap generator, and use it to compute the new heightmap as getTerrainHeight(uv + displacement)

Optimizing the displacement

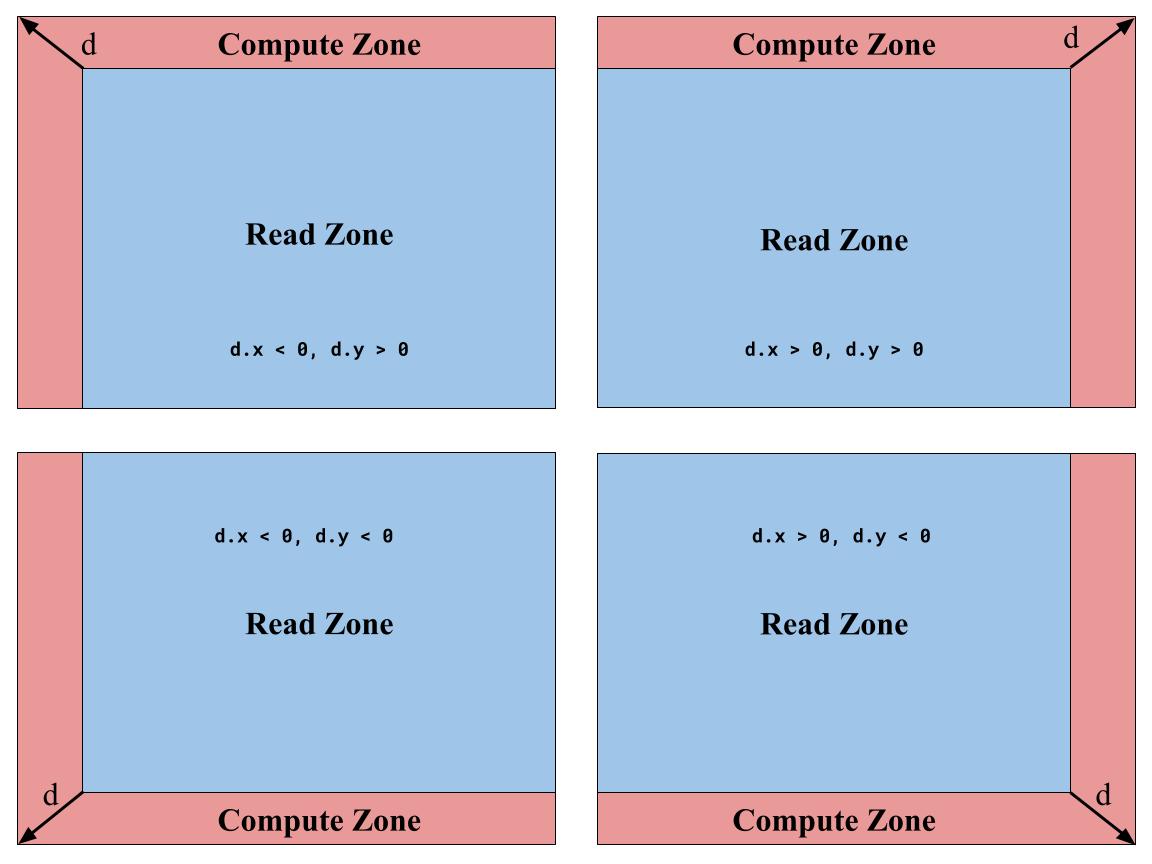

With this implementation, the heightmap is indeed infinite, but the smallest displacement results in the code computing the whole heightmap again. But, as shown on the schema, when moving along the displacement vector d, only a small fraction of the heightmap is new (in red). To solve this waste of time and ressources, the solution would be to read the previous value of the heightmap when possible, and compute only the points when necessary.

To do so, we need not only to pass to the fragment shader the vector of cumulated displacement, but also the vector representing the new displacement at that frame. This vector allows us to define which points of the texture we have to compute, and which we can read from our previous computation.

We now have to be careful with our use of displacement and new_displacement. When computing new points, we use the total displacement as before. But when reading from the previous heightmap, the point previously generated already took into account the cumulated displacement as it was during the previous loop. So now, we only have to shift the UV we want to read by the new displacement, giving us the following code:

//Code from noise_fshader.glsl in the source

//Compute the new point if necessary, read it if possible

bool xReadZone = (new_displacement.x > 0) ? (uv.x < (1.0 - new_displacement.x)) : (uv.x > -new_displacement.x);

bool yReadZone = (new_displacement.y > 0) ? (uv.y < (1.0 - new_displacement.y)) : (uv.y > -new_displacement.y);

if(xReadZone && yReadZone){

pos = uv + new_displacement;

h = readLastBuffer(pos);

} else {

pos = uv + displacement;

h = getTerrainHeight(pos);

}

One last detail to take into account is that if we allow interpolation in the float framebuffer used for the heightmap, each time we move (up to 60 times per seconds), we smooth the heightmap, resulting in just a few seconds in a roundish terrain. The solution to this problem is to discretize the cumulated and new displacement vector in function of the dimensions of the framebuffer:

//Code from Noise.h in the source

float roundToPrecision(float precision, float value){

return precision * round(value/precision);

}

vec2 roundToPrecision(float precision, vec2 value){

float x = roundToPrecision(precision, value.x);

float y = roundToPrecision(precision, value.y);

return vec2(x,y);

}

void move(vec2 delta){

//We set the displacement to a multiple of the "texel size" such that the

//interpolation doesn't smooth the terrain

vec2 d = roundToPrecision(1.0/float(tex_size_), delta);

new_displacement_ = d;

displacement_ = displacement_ + d;

displaced_ = true;

} Buffers for optimization

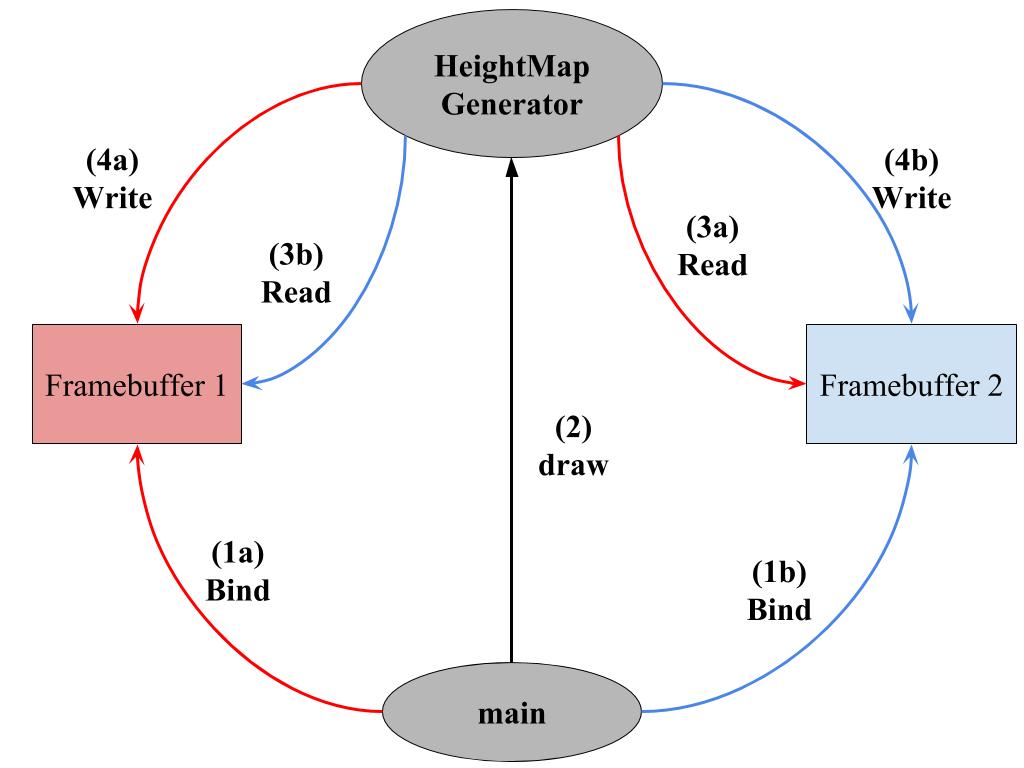

The optimization is almost ready to go now. The only problem remaining is that we want to read the previous heightmap in order to generate the updated one. Unfortunately, it is not possible to read from a FrameBuffer while also writing in it. So we have to create a second buffer exactly in the same manner as the first one. In addition to this cloned buffer, we need to create a variable to store in which buffer we last wrote (e.g. int last_buffer = 1 //or 2), along with a variable pointing to the current framebuffer texture. Where we previously passed the framebuffer texture, we now pass this variable. Moreover, at the first frame, we have to tell the shader code of the Heightmap Generator that we want it to render the whole heightmap (since we have nothing to read), and then at each frame:

- Bind the buffer that is not

last_buffer - Tell the shader code from the Heightmap Generator to read in the texture from

last_buffer - Draw the heightmap while reading in the previous texture as explained

- Unbind the buffer

- Update the

last_bufferint - Update the

current_bufferGLuint

Given that modified represents whether or not some parameter of the heightmap changed, displaced is true if there is a new displacement since the last frame. Here is what the code looks like in the fragment shader of the heightmap generator:

//Code from noise_fshader.glsl in the source

float readLastBuffer(vec2 uv){

return (last_buffer == 1) ? texture(height_map, uv).r : texture(height_map2, uv).r;

}

//Compute the new point if necessary, read it if possible

void main() {

//First Frame

if (pass == 1){

color = getTerrainHeight(uv);

//Any other frame

} else {

vec2 pos;

float h;

if(modified > 0){

pos = uv + displacement;

h = getTerrainHeight(pos);

} else {

if(displaced == 0){

pos = uv;

h = readLastBuffer(pos);

} else {

bool xReadZone = (new_displacement.x > 0) ? (uv.x < (1.0 - new_displacement.x)) : (uv.x > -new_displacement.x);

bool yReadZone = (new_displacement.y > 0) ? (uv.y < (1.0 - new_displacement.y)) : (uv.y > -new_displacement.y);

if(xReadZone && yReadZone){

pos = uv + new_displacement;

h = readLastBuffer(pos);

} else {

pos = uv + displacement;

h = getTerrainHeight(pos);

}

}

}

color = h;

}

}

And here is the C++ code where we cycle between the buffers:

//Code from main.cpp in the source

// read buffer 1 write in 2

if(last_buffer == 1){

height_buffer2_.bind();

initViewport(tex_size,tex_size);

noise_.drawHeightMap(2, last_buffer);

height_buffer2_.unBind();

last_buffer = 2;

current_noise_buffer_ = fb_tex_noise_2_;

//read buffer 2 write in 1

} else if(last_buffer == 2) {

height_buffer_.bind();

initViewport(tex_size,tex_size);

noise_.drawHeightMap(2, last_buffer);

height_buffer_.unBind();

last_buffer = 1;

current_noise_buffer_ = fb_tex_noise_;

}